Welcome back! Today we’re going to zoom around again in some odd directions, and give a roundabout introduction to the semiconductor industry, touching on some of the following questions:

- How do semiconductors get designed and manufactured?

- What is the business of semiconductor manufacturing like?

- What are the different types of semiconductors, and how does that affect the business model of these manufacturers?

- How has the semiconductor industry evolved over time?

- How do semiconductor manufacturers approach risk-taking in their strategic decisions?

This last question on risk-taking is especially relevant to the chip shortage, because semiconductor manufacturing involves a lot of expensive, high-stakes decisions with consequences that arise several years later — something that influences the choice of how much manufacturing capacity to build. (Robert Palmer, a member of AMD’s Board of Directors, allegedly compared building semiconductor fabs to Russian roulette: “You pull the trigger, and four years later you learn whether you blew your brains out or not.”)

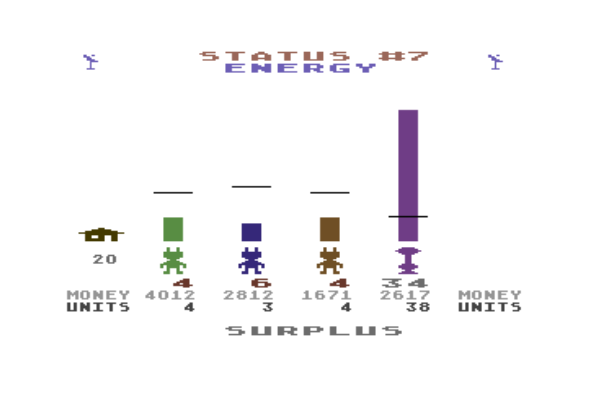

In part one of this series, I talked about how bad the chip shortage is, I presented a two-minute summary of some of the factors that led to the chip shortage, and I gave a sneak preview of some of its nuances. We looked at toilet paper shortages. And played Lemonade Stand. Yes, really.

Part two mostly centers on a kind of 1980s-1990s “retro” theme. If you don’t remember the USSR, the Berlin Wall, Yugoslavia, or parachute pants, consider this a history lesson of sorts.

Every good history of Silicon Valley starts with how William Shockley left AT&T’s Bell Laboratories, where he co-invented the first working transistor with John Bardeen and Walter Brattain in 1947, to start Shockley Semiconductor, located in a Quonset hut in Mountain View, California, in 1956, and how the Traitorous Eight left Shockley Semiconductor and started Fairchild Semiconductor in 1957 with a secret meeting in the Clift Hotel, where they signed dollar bills as a symbolic contract.

This is not that kind of history.

Think of it, instead, as a sort of magical journey with an invisible, attention-span-deficient Ghost of Semiconductor Past, starting in 1983 — ooh, look, there’s Huey Lewis and Cyndi Lauper! — and bouncing around among the past few decades. A set of hyperactive case studies, in a manner of speaking. (This is a long article. A very, VERY LONG article — no, you don’t understand; I’ve written long articles before, but this is a record for me — which I hope is worth the time needed to read it. It could probably be four or five articles instead, but I have my reasons for not splitting it up.) We’ll be covering these sorts of topics:

- some impacts of the prevailing customer market from around 1975-1985, during the Microcomputer Revolution

- the value (or lack thereof) of certain media commentary on the chip shortage

- an introduction to semiconductor fabrication with MOS Technology and Commodore

- some concepts of microeconomics

- capital expenditures

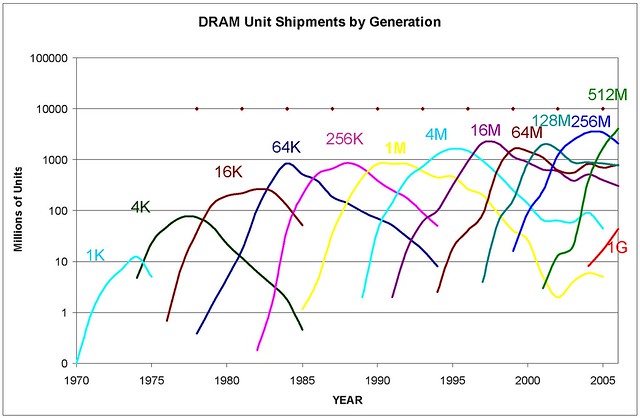

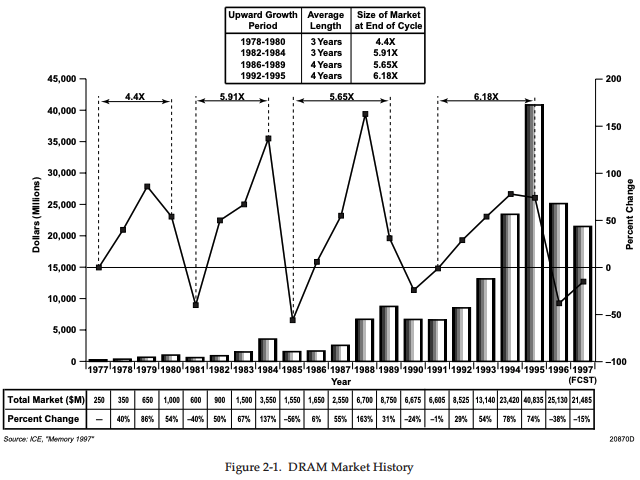

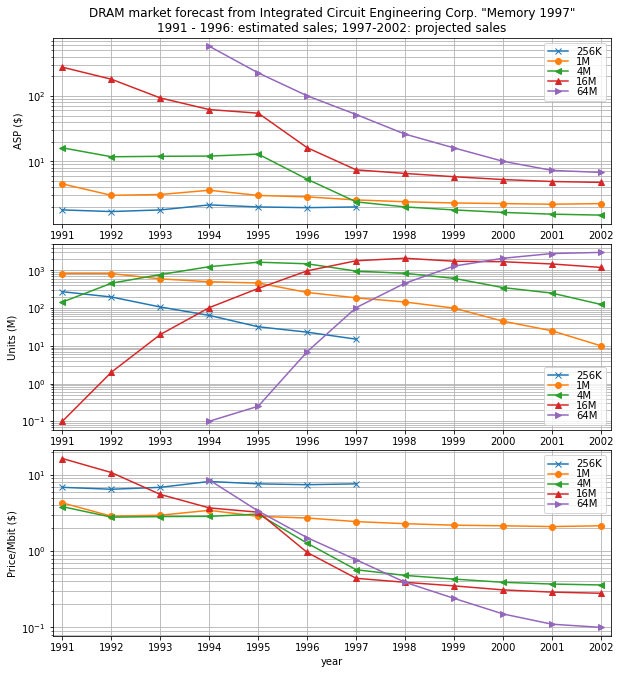

- the history and economics of DRAM manufacturing

- purchases of new and used semiconductor fabrication plants

But first, back to 1983. Why 1983? I have some personal reasons — for example, I first played Lemonade Stand in the fall of 1983 — but, more importantly, that year marked a major turning point in the rise of the personal computer. There are two pieces of evidence I’d like to share: a quantitative graph, and one little excerpt from a newspaper article of the time.

Interlude, 1983: A Brief Introduction to the Microcomputer Revolution and the Semiconductor Industry

The graph is from Jeremy Reimer’s excellent writeup of three decades of the personal computer industry in Ars Technica in 2005. The sales growth of the Commodore 64 and IBM PC in 1983 was just insane. (Go read the whole piece if you have time.)

The excerpt is from an article in the December 10, 1983 issue of the New York Times, titled Under 1983 Christmas Tree, Expect the Home Computer:

This is the year in which the home computer will join the sled and the bicycle under the Christmas tree.

In numbers that outstrip even the most optimistic predictions, Commodores, Ataris and Colecos are being snapped up from the shelves. Americans have embraced the home computer as their favorite gadget for a Christmas present, replacing the food processors and video games of Christmases past.

“Last year, computers were new, unique and expensive,” said Egil Juliussen, president of Future Computing Inc., a market forecasting concern that expects 2.5 million home computers to be sold this Christmas, twice as many as last year. “This year, they’re cheap, and they have become the gift.”

Only six months ago, a fierce price war erupted among home computer manufacturers, sending many into a tailspin from which it appeared some would not recover. This year, the industry will lose almost \$1 billion.

Price War Aids Consumers

But the same price war that badly hurt the industry has brought home computers within the reach of millions of families. At the same time, industry advertising has helped make people feel more comfortable with the notion of a computer in their home or, alternatively, apprehensive that their children will fail without them. Suddenly, the home computer glut has given way to a shortage.

So there you have it. Boom! Home computer explosion. Gluts and shortages. Sounds familiar.

1983 began with Time Magazine’s January 3, 1983 “Man of the Year” issue dedicated to the computer. This issue contained a special section on computers, including a feature titled The Computer Moves In along with articles on programming and a computer glossary. While the Man of the Year title (actually Machine of the Year) was judged based on the status at the end of 1982, I really think of 1983 as the Year of the Personal Computer. Sure, the preceding years each brought new and exciting developments:

- 1977: the Apple II, the TRS-80, the Commodore PET, and the Atari VCS

- 1978: the Intel 8086 processor and Space Invaders

- 1979: the Atari 400 and 800 and TI-99/4

- 1980: Pac-Man and the Sinclair ZX-80 and Commodore VIC-20

- 1981: the IBM PC and the arcade game Donkey Kong

- 1982: the Intel 80286 processor and Commodore 64

There was certainly a sense that The Future Is Here!; articles in various magazines and newspapers heralded a new age of technology. The New York Times had an article in December 1978 predicting that “The long‐predicted convergence of such consumer electronic products as television sets, videotape recorders, video games, stereo sound systems and the coming video‐disk machines into a computer‐based home information‐entertainment center is getting closer.”

But I stand by my choice of 1983 as the year that personal computers really took off, both in an economic and cultural sense. Here are a few other reasons:

-

The movie WarGames came out in summer 1983, and with the later September 5, 1983 issue of Newsweek about Neal Patrick and the Milwaukee 414s, we were all introduced to the subculture of “hackers”.

-

Compute’s Gazette began publication, bringing articles and free software (to those willing to type it in from code listings) to Commodore computer owners, as a spinoff of Compute! Magazine. As it explained in its premier issue:

Where is the demand coming from? Well, we estimate that Commodore is currently selling over 100,000 VIC-20s and 64s a month. Dozens of software and other support vendors are rushing to supply products for these rapidly growing markets. Personal computing power is now expanding at a rate far past that predicted by industry observers. With the recent price decreases in the VIC-20 and 64, we expect this trend to continue its dynamic escalation.

-

Electronic Arts published its first games in 1983; we’ll take a closer look at one of them.

-

In summer 1983 I went to a “computer camp” for a week where we got to try out programming with VIC-20 computers and saw WarGames at the movie theater. Later that year, my family bought a Commodore 64. (Like I said, personal reasons. Okay, you don’t really care about either of those events, but they made a big impression on me.)

-

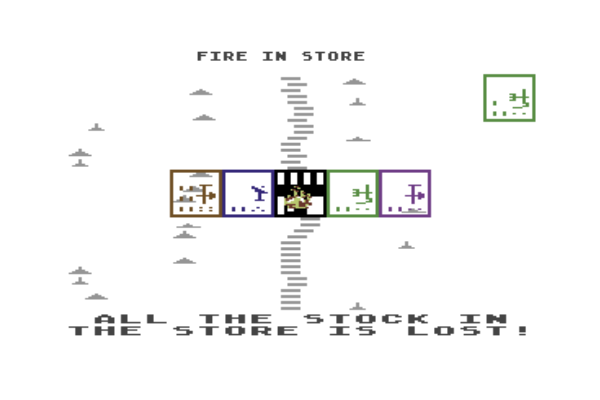

The video game crash of 1983 resulted from a tidal wave of interest in hardware and software companies racing to fill a mass demand for video games in the United States. It got filled, all right. Price wars dropped the cost of video game consoles and low-end computers like the Commodore 64 to the point where they were more affordable — good for consumers, but bad for the manufacturers. Atari was one of the most heavily-hit companies, with so many unsold games and consoles that they actually buried this excess inventory in a New Mexico landfill. The glut devastated the video game console market for two years, until the 1985 release of the Nintendo Entertainment System — and was almost entirely predictable. As Wikipedia states:

Each new console had its own library of games produced exclusively by the console maker, while the Atari VCS also had a large selection of titles produced by third-party developers. In 1982, analysts marked trends of saturation, mentioning that the amount of new software coming in would only allow a few big hits, that retailers had devoted too much floor space to systems, and that price drops for home computers could result in an industry shakeup.

In addition, the rapid growth of the videogame industry led to an increased demand, which the manufacturers over-projected. In 1983, an analyst for Goldman Sachs stated the demand for video games was up 100% from the previous, but the manufacturing output had increased by 175%, creating a significant surplus. Atari CEO Raymond Kassar recognized in 1982 that the industry’s saturation point was imminent. However, Kassar expected this to occur when about half of American households had a video game console. Unfortunately, the crash occurred when about 15 million machines had been sold, which soundly under-shot Kassar’s estimate.
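To put the Goldman Sachs figures in perspective, here is a rough calculation. It assumes (my assumption, not something the quote states) that output and demand were roughly balanced in the prior year, and that both percentages are year-over-year changes from that common baseline:

$$
\frac{\text{output}_{\text{new}}}{\text{demand}_{\text{new}}} = \frac{1 + 1.75}{1 + 1.00} = \frac{2.75}{2.00} = 1.375
$$

Under those assumptions, manufacturers made about 37.5% more than customers wanted, so roughly 27% of that year’s output (0.75 out of 2.75) had nowhere to go.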

Oddly enough, in 1983 there was a semiconductor shortage:

Demand, which has picked up sharply in recent months mostly because of the boom in personal computers, is now exceeding supply for most kinds of silicon chips used in computers, telecommunications equipment and other electronic devices.

At a conference here, industry executives said customers had to wait as much as 20 weeks or more for delivery of their orders. On the one hand, the shortage is a blessing for the companies, which are selling all they can make. Price-cutting has ended, profits are improving, and company stock prices have risen.

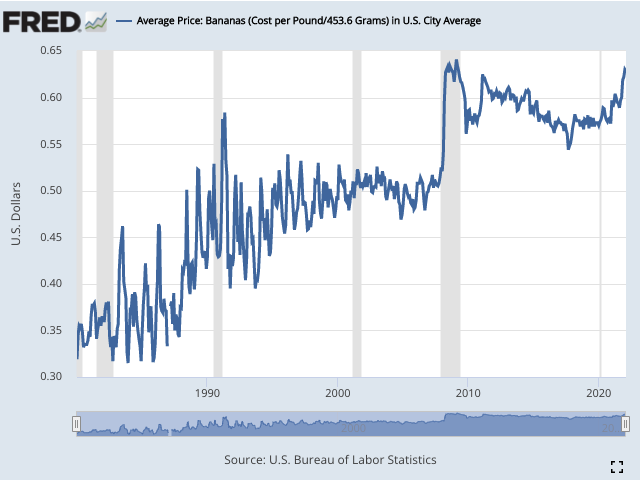

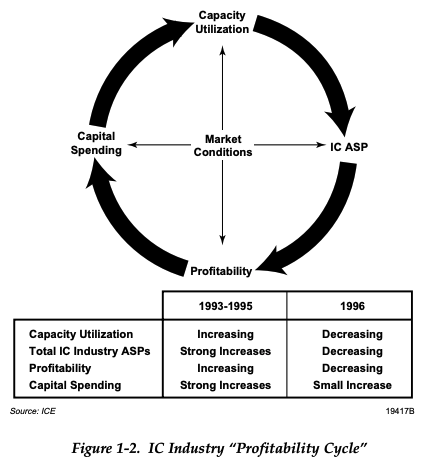

Both gluts and shortages have related dynamics, which are relevant to understanding this year’s semiconductor shortage. The computer and semiconductor industries are both examples of cyclical industries, which undergo boom and bust periods somewhat akin to oscillations in an underdamped, marginally stable control system.

Why do we get these sorts of business instabilities, especially in the high-tech world, and even when we can see them coming? Part of the answer comes from time delays, and part of it from corporate motivation to manage the pain of capital expenditures, something that becomes very clear if you look at the business of DRAM (dynamic RAM).
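The time-delay part can be seen in a toy model. The Python sketch below is my own illustration, not a model of any real company, and every parameter in it is hypothetical: demand steps up by 20%, a planner orders new capacity in proportion to today’s shortfall (naively ignoring capacity already under construction), and orders take `delay` periods to come online. With a one-period delay, capacity creeps up to meet demand; with a four-period delay, it overshoots into a glut and then undershoots, much like an underdamped control loop.

```python
def simulate_capacity(periods=40, delay=4, gain=0.3, step_at=5,
                      old_demand=1.0, new_demand=1.2):
    """Toy capacity-planning loop; returns (demand, capacity) per period.

    Each period, the planner compares today's capacity with today's demand
    and orders gain * gap of new capacity (negative means retiring capacity).
    Orders take `delay` periods (delay >= 1) to arrive, and the planner
    ignores capacity that is already in the pipeline.
    """
    demand = [old_demand if t < step_at else new_demand for t in range(periods)]
    arrivals = [0.0] * (periods + delay)  # capacity scheduled to come online
    capacity = []
    cap = old_demand  # start with supply and demand balanced
    for t in range(periods):
        cap += arrivals[t]          # capacity ordered `delay` periods ago
        capacity.append(cap)
        gap = demand[t] - cap       # > 0 is a shortage, < 0 is a glut
        arrivals[t + delay] += gain * gap
    return demand, capacity


if __name__ == "__main__":
    for d in (1, 4):
        _, cap = simulate_capacity(delay=d)
        print(f"delay={d}: peak capacity {max(cap):.3f}, "
              f"later low {min(cap[16:]):.3f} (demand is 1.200)")
```

With these made-up numbers, the four-period case peaks at roughly 1.31 against a demand of 1.2 before swinging back below it. Larger gains or longer delays make the swings bigger and slower to die out, and past some point they grow instead of decaying.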

Disclaimers

I need to get through a few disclaimers:

I am not an economist. I am also not directly involved in the semiconductor manufacturing process. So take my “wisdom” with a grain of salt. I have made reasonable attempts to understand some of the nuances of the semiconductor industry that are relevant to the chip shortage, but I expect that understanding is imperfect. At any rate, I would appreciate any feedback to correct errors in this series of articles.

Though I work for Microchip Technology, Inc. as an application engineer, the views and opinions expressed in this article are my own and are not representative of my employer. Furthermore, although from time to time I do have some exposure to internal financial and operations details of a semiconductor manufacturer, that exposure is minimal and outside my normal job responsibilities, and I have taken great care in this article not to reveal what little proprietary business information I do know. Any specific financial or strategic information I mention about Microchip in these articles is specifically cited from public financial statements or press releases.

There you go; you’ve been informed.

One other topic I want to cover, before returning to the early days of computing, is some recent gossip, to remind us of a few very important points.

Interlude, 2021: Tech Gossip (Blame, Excuses, Prognostication, and Silver Bullets)

It’s always interesting to hear What Some People Say about the chip shortage… bad news is a time when everyone gets to weigh in on the topic, like a relative repeating gossip at a funeral. (“Oh, I heard he wrote Jimmy out of his will for dating his ex-wife, such a shame, if only he’d cut back with the cigarettes maybe he would have beat the cancer.”)

There is a lot of this sort of thing in the news lately. You need to realize that no one has a satisfying answer, so in that vacuum, here’s what we get instead: superficial coverage of the problem that touches on half-truths, wishful thinking, and speculation.

Pat Says

Intel’s CEO, Pat Gelsinger, was quoted in an article in Fortune titled “Chipmakers to carmakers: Time to get out of the semiconductor Stone Age” as saying that automotive manufacturers should be making ICs on more modern technology processes:

“I’ll make them as many Intel 16 [nanometer] chips as they want,” Intel chief executive Pat Gelsinger told Fortune last week during his visit to an auto industry trade show in Germany.

Carmakers have bombarded him with requests to invest in brand-new production capacity for semiconductors featuring designs that, at best, were state of the art when the first Apple iPhone launched.

“It just makes no economic or strategic sense,” said Gelsinger, who came to the auto show to convince carmakers they need to let go of the distant past. “Rather than spending billions on new ‘old’ fabs, let’s spend millions to help migrate designs to modern ones.”

And in his keynote speech at that IAA Mobility trade show (at 17:50):

I’m also excited today to announce our Intel Foundry Services. In March of this year, I announced IDM 2.0 that we are foundering our products, but we’re also opening wide the doors of Intel to be a foundry for others’ products as well. Today, we’re announcing the European foundry services at Intel 16 and other nodes out of our Ireland facility. And we believe this has an opportunity to help expedite the end to this supply shortage, and we’re engaging with auto and other industries to help build on those, uh, capabilities.

But I’d also say some might argue, well, let’s go build… most of those auto chips are on old nodes. Don’t we need some old fabs for old nodes? Do we want to invest in our past or do we want to invest in the future? A new fab takes four or five years to build and have production-worthy.

Not an option to solve today’s crisis. Invest in the future, don’t invest backwards. Instead, we should be migrating old designs onto new, more modern nodes, setting them up for increased supply and flexibility into the future.

Lisa Says

AMD’s CEO, Lisa Su, said in an interview at Code Conference 2021:

If you think about the semiconductor industry, we’ve always gone through cycles of ups and downs where, you know, demand has exceeded supply or vice versa. This time it’s different. And what’s different this time is every industry needs more, and so, you know, the confluence of that means that there is a, there is an imbalance. I will say that there’s a tremendous amount of investment going in, so, uh, there are, you know, over 20 new factories are coming online this year… and, and, you know, 20 more, you know, 20+ more in… um… uh… in planning and, um, so it’s still gonna be tight, you know, this year’s tight, first half of next year likely tight, but it’ll get better, as we get into 2022.

…

… we’ve seen, um, some stuff about automotive shortages ‘cause there were some supply chain interruptions there, so— It’s just every market has seen the demand go up, and um, and the key here with these complex supply chains is you may need thousands of chips, you know, only one of them being short is going to cause you to not ship your system, and so there’s just a lot of, let’s call it, um, mixing and matching of, of these things… But— you know, what I will say is, it will be solved. Okay, we, heh, [chuckling] the semiconductor industry has been through these things and it, it will absolutely, um, you know, uh, normalize, uh, supply to demand.

[Kara Swisher, host, interrupting] And when do you expect that to happen?

[Su] Uh, I would say it gets better next year. You know, not, not, um, not immediately, but it will gradually get better, as, uh, more and more plants come up, and it takes, you know, we’re an industry that just takes a long time to get anything done, so, you know, it might take, you know, 18 to 24 months to put on a new plant, and in some cases even longer than that, and, so, you know, these investments were started, uh, perhaps a year ago, and so they’re, they’re coming online, you know, as we, as we go through, uh, the next couple of quarters.

Elon Says

Elon Musk said, in a tweet in August 2021:

Tesla makes cars for export in first half of quarter & for local market in second half.

As publicly disclosed, we are operating under extreme supply chain limitations regarding certain “standard” automotive chips.

Most problematic by far are Renesas & Bosch.

Musk also stated in Tesla’s Q4 2021 earnings call, when asked for some “color” on the supply chain situation — Wall Street analysts can never get enough color — near the end of the call:

Elon Musk: Well, last year was chip hell of many chips, uh, so, silicon carbide inverters — were certainly one of them, but, uh, um —

Drew Baglino (Senior VP, Powertrain and Energy Engineering): Honestly, there’s a lot of annoying very boring parts.

Elon Musk: Yeah. It’s a ton of very simple control chips that, run-of-the-mill, literally, you know —

Drew Baglino: [Inaudible] you know.

Elon Musk: Yeah, basic chips to control.

Drew Baglino: Voltage references, oscillators, so they’re very boring things.

Elon Musk: Yeah, exactly. Like the little chip that allows you to move your seat back and forth. [Chuckling] That’s, actually, was a big problem.

Drew Baglino: Yeah.

Elon Musk: … the… couldn’t make seats. Um, so, I — like — but a lot of these things are alleviating. I think there’s, there’s some degree of the toilet paper problem as well, where, um, you know, the toilet paper shortage, uh, during COVID, and uh, like obviously it wasn’t really a, a suddenly, a, a tremendous enhanced need for ass wiping. Um, it’s just people panicked in order to — and got every paper product you probably, you could possibly wipe your ass with, basically. Um, and I wasn’t sure, is this like a real thing or not? I actually took my kids to the H-E-B and Walmart in Texas to just confirm that this was real.

Indeed, it was. Um, and there was, there’s plenty of food and everything else, but just nothing, no paper products, um, that didn’t cause a split up. So, um, an odd choice for people to panic about. Um, those, those things are — so, end of the world’s coming, I think toilet paper is the least of your problems.

Um, so, so, I think we, we saw just a lot of companies over-order chips, uh, and, and they buffer the chips, um, and so we should see — we are seeing alleviation in that… almost every area, but the output of the vehicle is — uh, goes with the, the least lucky, um, you know, um, what, whatever, whatever the most problematic item in the entire car is, and there’s like, at least, ten thousand unique parts in the car, so, uh, you know, way more than that if you go further up the supply chain, and it’s just — it’s just, which one is gonna be the least lucky one this time? It’s hard to say.
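Su’s “only one of them being short” and Musk’s “least lucky” part describe the same arithmetic: a product’s output is capped by its scarcest component. Here is a minimal Python sketch of that idea. The part names, quantities, and the 0.01% per-part shortage probability are hypothetical, and the second function assumes shortages of different parts are independent, which real supply chains certainly are not.

```python
def buildable_units(bom, inventory):
    """Return how many complete units the on-hand inventory supports.

    bom maps part name -> quantity needed per unit; inventory maps part
    name -> quantity on hand. A part missing from inventory counts as zero.
    """
    return min(inventory.get(part, 0) // qty for part, qty in bom.items())


def prob_any_part_short(p_short, n_parts):
    """Probability that at least one of n_parts is short, assuming each part
    is independently short with probability p_short (a simplification)."""
    return 1.0 - (1.0 - p_short) ** n_parts


if __name__ == "__main__":
    # Hypothetical bill of materials for one vehicle subassembly.
    bom = {"mcu": 1, "voltage_reference": 2, "seat_motor_driver": 4}
    inventory = {"mcu": 5000, "voltage_reference": 9000, "seat_motor_driver": 800}
    # Plenty of MCUs and references, but the seat driver caps output.
    print(buildable_units(bom, inventory))  # prints 200

    # A 1-in-10,000 chance per part adds up across 10,000 unique parts.
    print(round(prob_any_part_short(1e-4, 10_000), 3))  # prints 0.632
```

The second result is the uncomfortable part: even if each individual part is very unlikely to be short, with enough unique parts it becomes likely that at least one of them is.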

He Says That They Say That Somebody Says

Robert Carnevale wrote in a Windows Central article titled “The global chip shortage’s cause has been found — it boils down to one company, says report”:

As reported by DigiTimes, some Taiwan-based tech manufacturers — think smartphones, PCs, and related gadgetry — have singled out Texas Instruments as being at the epicenter of the chip shortage’s widespread production pandemonium (via WinFuture). In case the name “Texas Instruments” sounds familiar to you, that’s because you very well may have used one of its calculators in your lifetime. That’s the company being accused of having a vice grip on global technology output.

This accusation is based on the fact that Texas Instruments manufactures analog chips that are essential for duties such as PC voltage regulation. Said chips are a fundamental part of much computing technology, and are in a more dire supply situation than the advanced, specialized chips the likes of TSMC and co. produce.

The aforementioned Taiwan-based sources say Texas Instruments’ inability to ramp up production capacity is the fundamental problem underpinning everything else. The question now is whether this supposed culprit identification will have any impact on the U.S. government’s shortage-combatting plans.

The DigiTimes articles — there are two of them, one mentioned above and another titled “Highlight of the Day: TI reportedly slow in expanding production” — are behind paywalls now; I managed to stash the content from the Highlight of the Day article back in November (and have faithfully reproduced its typographical errors here), for whatever it’s worth:

Pure-play foundry houses have been keen on expanding fab capacity, but the notebook industry continues to see serious chip shortage. Some industry sources are blaming Texas Instrument for its insuffcient supply. Networking device makers expect chip and component shortages will persist in 2022. But in the passive components sector, makers have seen demand slow demand.

IC shortage unlikely to ease until TI ramps up output: The global IC shortage is unlikely to ease until Texas Instruments scales up its output, according to sources in the notebook industry, which see supply-side constraints caused mainly by TI’s insufficient supply.

Enough of the Rumor Mill

There’s also Renée Says (Ampere’s Renée James: “I think what we thought was that we, I, I think this demand signal might have been read incorrectly in 2019 and 20 and in addition to that it uncovered, um, the fragility of the supply chain for semiconductors worldwide and the decline in U.S.-manufactured semis, which as you know is not just a supply chain issue, it’s also a national security issue for us, so, um, you know, one of the things that companies like mine, a small company, a startup only four years old, however I’ve been in this business for over 30 years, we know that there’s some systemic long-term things that we need to go after to build the health of U.S. semiconductors and that is what the Commerce Department is focused on talking with all of us about tomorrow and working on over the next five years. I mean, this is not a short-term thing, it’s something we need to get after as a national agenda.”) and Cristiano Says (Qualcomm’s Cristiano Amon: “I think in general, uh, everyone is complaining about, uh, the shortage of legacy technology and uh… but even those are gonna come online and uh… we’ll feel good about where we’re gonna be in 2022.”) and some others, but I’ve reached my limit.

This stuff is just frustrating to read, and I feel dirty repeating some of it, but I want to make a point: None of the tech gossip is really that useful.

Pat Gelsinger wants to help Intel sell its foundry services to the auto industry, and to make automakers more resilient to capacity issues by having their ICs manufactured in fabs (Intel’s fabs, of course!) that use newer technology and are seeing more investment — and we’ll talk about why that might or might not be useful in another article. Lisa Su is trying to paint a reassuring picture… but AMD doesn’t have any fabs, so it doesn’t control the situation directly; instead, it depends on foundries like TSMC to manufacture its processors. I trust she knows much more than I do about the situation, but at the same time, there’s no way to give a detailed answer without likely being wrong or sending messages to the financial world that can only get her or AMD into trouble. Elon Musk is rich enough that he can say what he wants and blame who he wants. If you want to learn something useful from CEOs, go listen to their company’s earnings calls and pay attention to concrete details that they are relating about their own company. If they’re talking about anyone else’s company, they’re no longer the expert.

As for the Windows Central and DigiTimes articles… bah!

I suppose there are shreds of truth in most of these sources, but you have to read enough in aggregate about the subject that you can distinguish the shreds of truth from what’s just a rumor, and unless there are supporting data or references to back up the assertions, they’re still just part of the rumor mill. Some say the chip shortage will be over soon, some say it will linger on; in fact, one day in mid-November of last year I spotted both back-to-back in my news feed:

The answers are all over the place. The uncertainty and confusion are enough to make you just throw up your hands in exasperation. What can you do?

I’ve been looking for good summaries of the situation for several months, and most of the time, whenever I think I’ve found something useful, in the same article or video there’s another detail that’s just totally out of left field and discredits the whole thing. Like a YouTube video (5 reasons why the world is running out of chips) that started off well, but then mentioned “minicontrollers” (are those just very large microcontrollers?) and towards the end slid into a lost-in-translation platitude that the chip-making industry “will need to keep innovating” and “will need to increase its capacity by working quicker, building square miles, and employing more people and machines.” Argh!!!

(If you do want some useful summaries, look at the links I listed near the beginning of Part 1.)

I’d like to leave the present gossip, and return to a historical look at earlier decades, instead of trying to explain today’s shortages directly. There are a few reasons. (Aside from the fact that today’s news is a bit demoralizing, whereas the 1970s and 1980s and 1990s exist at a safe, nostalgic distance.) One is that history tends to repeat itself, so we can look at a few of the past semiconductor business cycles, in their entirety, with the benefit of hindsight, instead of this mystery situation where no one really knows what is going to happen. The second is that the systems of the 1970s and 1980s are simpler to understand and explain. And the third is that I can actually find a lot of material on semiconductor history — there seems to be an unspoken statute of limitations on proprietary technology, so that if you’re an outsider and you want to know how today’s microcontrollers are designed, you’re out of luck, but people are willing to pull back the curtain and talk about the chips from the 1970s and 1980s, and all the crazy clever things they had to do to make them work.

So: the semiconductor industry. Yes.

Semiconductors! Meow!

I’m going to assume that, since this is on embeddedrelated.com, you’ve worked with embedded systems, and so words like “op-amp” and “MOSFET” and “microcontroller” make sense to you. If not:

Semiconductors for People Who Are Not Electronics Enthusiasts

I think it would be easier for the average person to understand the semiconductor industry if we just called it the microelectronics industry. “Semiconductor” makes people’s eyes glaze over, and just says something about the material these products are made of — something that conducts more than an insulator, but less than a conducting metal — not what they do, which is to control electrical signals. So there are a bunch of semiconductor manufacturers. They make chips, also known as “integrated circuits” (ICs), those little things in the black plastic packages that go in your phone or your computer. These things:

Close-up photograph of a dsPIC33CK Low Voltage Motor Control Board, taken by the author

Inside the chips are lots and lots of really small things called transistors that switch on and off and can be used to form microprocessors that run software and figure out how to get information from other computers through the air and display it on a screen as cat videos.

For most of this article, you won’t need to know anything about how chips actually work. (For the rest, you’ll just have to catch up or work around the jargon and technical details.)

Semiconductors for Electronics Enthusiasts

If you have worked on embedded systems, you’ve probably bought ICs and soldered them on circuit boards and read datasheets and schematics. Maybe even designed your own circuit boards.

You can make your way through a whole career on just that kind of information, without knowing much about what goes on inside a chip or how these manufacturers were able to make the chips, thinking of them as just different brands of little black boxes. (I know, because I was one of those people during a good portion of my career, even after working at a semiconductor manufacturer for several years.)

If you stop thinking of the chip as a black box, and instead think of it as a container for transistors, which has been manufactured in a factory and designed by a company that may be different from the one that owns the factory — well, then, things get a bit interesting. I think you should probably know something about these aspects, which I mentioned at the beginning of today’s article:

- How do semiconductors get designed and manufactured?

- What is the business of semiconductor manufacturing like?

- What are the different types of semiconductors, and how does that affect the business model of these manufacturers?

- How has the semiconductor industry evolved over time?

- How do semiconductor manufacturers approach risk-taking in their strategic decisions?

Here’s a short version:

- Semiconductor ICs contain a “die” (sometimes more than one) which has been manufactured as part of a circular wafer.

- The surface of the die has been built up in a bunch of layers, using equipment that adds or removes materials selectively from parts of the die.

- Some of the layers are made out of semiconductors, usually altered in some way to change their electrical properties and form transistors.

- Other layers are made out of metal, forming lots of very small wires.

- The selective adding or subtracting usually happens with the help of photoresist that has been selectively exposed using light that shines through a glass mask. (The most cutting-edge process, EUV (extreme ultraviolet) lithography, uses mirrors to reflect extreme ultraviolet light generated from a tin plasma.)

- IC designers figure out what kind of transistors will fit on the die, how to hook them together, and how to test them. Nowadays everything is computerized, and specialized computer software called electronic design automation does most of the grunt work, but it wasn’t that way in the 1970s or even the early 1980s.

- There are different types of semiconductors; market analysis of the industry usually divides them roughly into the following categories (see for example the World Semiconductor Trade Statistics Product Classification):

  - Discretes — mainly diodes and transistors. Power diodes and transistors are growing in sales; signal diodes and transistors are getting rarer but are occasionally used as “glue” circuitry.

  - Logic — this covers FPGAs, application-specific integrated circuits (ASICs), and the systems-on-chip (SoCs) found in cell phones.

  - Microcomponents (general-purpose processors)

    - MPU — microprocessor unit. Here’s where your PC or server processor gets counted, probably also the GPU for driving displays.

    - MCU — microcontroller unit. These are embedded processors which usually have built-in memory and specialized peripherals, and they go into almost everything electronic these days.

    - DSP — digital signal processors

  - Memory — SRAM, DRAM, EEPROM, Flash, etc.

  - Analog — op-amps, comparators, voltage references, voltage regulators and other power management ICs, ADCs, DACs, etc., and sometimes interface ICs for communications.

  - Optoelectronics — LEDs, optoisolators, image sensors, etc. These usually use materials other than silicon.

  - Sensors — gyroscopes/accelerometers, pressure/force/strain sensors, humidity sensors, magnetic field sensors, etc. (Temperature sensors are probably counted as analog.)

  - Others that may not fit into the above groups, depending on who defines the categories. (RF transceivers, for example)

- Manufacturing processes have improved rapidly over the decades, decreasing the feature size (sometimes referred to as the “technology node”, “node”, or “process node”) found on ICs, which permits more and more transistors per unit area.

  - Terms like “leading edge”, “trailing edge”, or “mature” are used to describe feature sizes relative to the state of the art at the time. (Leading-edge are the smallest.)

- The different types of ICs require different manufacturing processes, and have different dynamics with respect to technological advance and obsolescence.

  - Memory, logic, and MPUs are the bulk of what’s driving the leading edge. Designs in these categories last a few years and then are made obsolete by newer designs.

  - Analog and discretes are longer-lived technology manufactured on older processes.

- Manufacturing facilities or “fabs” are extremely expensive, and get more so as the technology advances.

  - Cost per transistor has been steadily decreasing (until, perhaps, recently).

  - Increasing capacity by building new fabs is a risky proposition that requires predicting demand several years into the future.

- The cost to design an integrated circuit has been increasing as the technology advances and permits more complex ICs on smaller process nodes.

- Semiconductor companies now sometimes outsource their manufacturing, and they fall into several categories:

  - Foundries — these are companies that run fabs to manufacture IC die for other companies

  - Integrated device manufacturer (IDM) — these companies run their own fabs

  - Fabless — these companies do not own their own fabs and rely on foundries

  - Fab-lite — these companies may rely on a mixture of their own fabs and on external foundries

- Semiconductor companies are in an extremely competitive business; here are some reasons that companies go out of business or get acquired by others:

  - Not innovating enough to retain a technological advantage

  - Not controlling costs

  - Making poor predictions of demand

  - Taking on too much debt

That’s the three-minute boring version.

If you’re interested in some more in-depth Serious Summaries of the semiconductor industry, here are a few resources for further reading:

- Accenture, Harnessing the power of the semiconductor value chain (December 2021) — not a bad read.

- Jan-Peter Kleinhans & Dr. Nurzat Baisakova, The global semiconductor value chain (October 2020)

- Clair Brown and Greg Linden, Chips and Change, 2009. Sadly out of print, but worth buying a used copy. Paul McLellan has a nice summary on his EDAgraffiti blog.

The technological pace of innovation is something that makes the semiconductor industry different from almost every other industry, except for maybe magnetic disk storage. You’ll hear the term “Moore’s Law”, which means that each year we can cram more transistors onto a chip, and that each year we can see more cat videos faster, as long as we buy a new phone, because the old one is no longer supported. Moore’s Law is a double-edged sword; in addition to making new electronics better every year, it fosters obsolescence in its wake. As I mentioned at the beginning of this article, Bell Laboratories invented the transistor in 1947, when there were no cat videos, but Bell Laboratories is a shell of its former self, and is no longer in the semiconductor business. Shockley Semiconductor never really succeeded, and didn’t make it past the 1960s. The early decades of the industry are full of other semiconductor manufacturers that failed, sold off or closed their semiconductor divisions, or were gobbled up, and did not make it to the age of cat videos: Mostek, Rheem Semiconductor, Signetics, Sprague Electric, Sylvania, Westinghouse Electric, etc. To succeed in the semiconductor industry requires not only business acumen, and sometimes a lot of luck, but also a never-ending stream of innovation — if any of those ingredients is lacking, it can send a company off to the chopping block.

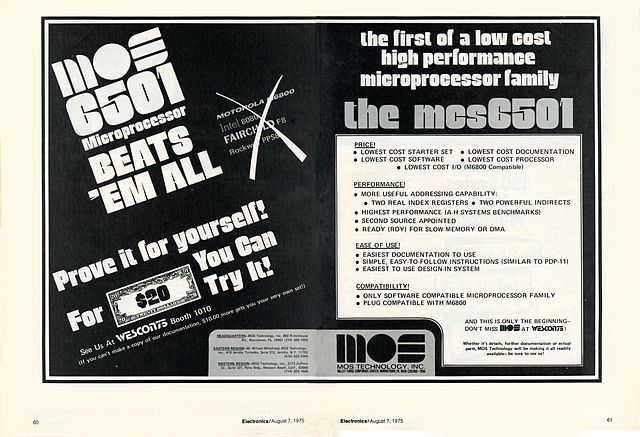

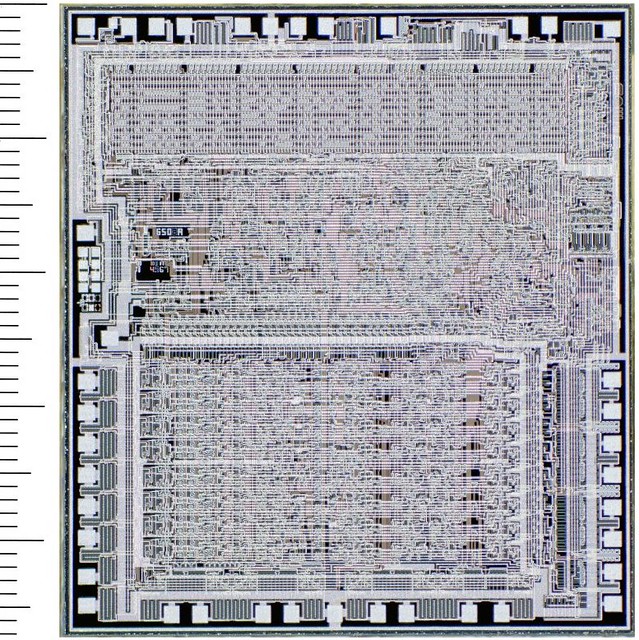

My three-minute boring description of the semiconductor industry is rather abstract; I’d prefer instead to give an example. So with that in mind, here is one particular chip, a groundbreaking design that powered many of those early 8-bit computers in 1983: the MOS Technology MCS 6502.

Photomicrograph © Antoine Bercovici (@Siliconinsid), reproduced with permission

Semiconductor Fabrication and the 6502

The 6502 was first delivered to customers in September 1975. This was one of a few iconic microprocessors of the late 1970s and early 1980s. To understand how big an impact this chip had, all you have to do is look at its presence in many of the 8-bit systems of the era, sold by the millions:

- Apple II

- Atari 400 and Atari 800

- Atari VCS (6507)

- BBC Micro

- Commodore PET and VIC-20

- Commodore 64 (6510)

- Commodore 128 (8502)

- Nintendo Entertainment System (Ricoh 2A03)

The eventual ubiquity of the 6502-based personal computer was the end result of a long process that began thanks to Motorola, for its intransigence over the pricing of the 6800 processor, and to Chuck Peddle and Bill Mensch, for getting frustrated with Motorola. In March 1974, Motorola had announced the 6800, but did not reach production until November 1974, initially selling the chip for \$360 per processor in small quantities. Chuck Peddle had been giving marketing seminars to large customers in early 1974 — he’d smelled opportunity and tried to convince Motorola to pursue a lower-cost version for the industrial controls market, but they weren’t interested.[1 page 24-26] By August, Peddle had hatched a plan, leaving Motorola and setting off across the country to join MOS Technology, a scrappy little integrated circuit manufacturer located near Valley Forge, Pennsylvania. Mensch, one of the 6502 designers who went to MOS with Peddle, says this: “The environment was a small company where Mort Jaffe, John Paivinen, Don McLaughlin, the three founders, had created small teams of very capable calculator chip and system designers, a quick turn around mask shop and a high yielding large chip manufacturing team out of TI. So you go from Motorola with, relatively speaking, an unlimited budget for design and manufacturing, to an underfunded design team with very limited design tools for logic and transistor simulation. We had to manually/mentally simulate/check the logic and use very limited circuit simulation. In other words, it was really low budget. The datasheets and all documentation was done by the design team.”[2][3] Peddle persuaded Mensch and six other Motorola engineers — Harry Bawcom, Ray Hirt, Terry Holdt, Michael Janes, Wil Mathys, and Rod Orgill — to join him and a few others at MOS in designing and producing what became the MCS 6501/6502 chipset.

“At MOS John Paivinen, Walt Eisenhower, and Don Payne, head of the mask shop, and mask designer Sydney Anne Holt completed the design and manufacturing team that created the high yielding NMOS depletion mode load process,” says Mensch. “The result was the MCS 6501/6502, 6530/6532 Ram, ROM Timer and IO combo and 6520/6522 PIA/VIA microprocessor family.”[3]

Some technical details of the 6502 are slightly fuzzy after so much time has passed, but I have chosen to focus on the 6502 because it is such a well-known processor, and at least some details are available. Semiconductor manufacturers are notoriously secretive, and it is hard to find detailed descriptions of how modern ICs are designed and manufactured, whereas there are plenty of sources of information about the 6502.

(A word about the numbered notes: I don’t normally use such things, preferring instead a blogorrhific style of adding hyperlinks all over the place to point towards further information on various topics. But in this article, I have used notes to cite my sources a little more formally, for a few reasons. First, because there are inaccuracies about the 6502 floating about on the Internet, I’m trying to be a bit more careful. And since I’m not an expert in semiconductor manufacturing or economics, I feel like I have to point toward some specific accounts that back up my statements. Finally, a citation is a little more robust than a hyperlink in case an online publication becomes unavailable.)

EDN had a nice technical writeup of the 6502 in September 1975. BYTE magazine covered the 6502 in November 1975, with more of a focus on its instruction set than the physical aspects of the chip itself. Mind you, both these articles predated the use of the 6502 in any actual computer.

The manufacturing process for semiconductors is like printing newspapers. Sort of. Not really. Maybe more like the process for creating printed circuit boards. Well, at any rate, newspapers and printed circuit boards and semiconductors have these aspects in common:

- Production requires a big complicated manufacturing plant with many steps.

- Photolithography techniques are used to create many copies of a master original.

- The master original requires creating content and layout that fits in a defined area.

Except that the semiconductor industry has been expanding for decades without any sign of letting up, whereas the newspaper industry has been struggling to survive in the age of the Internet.

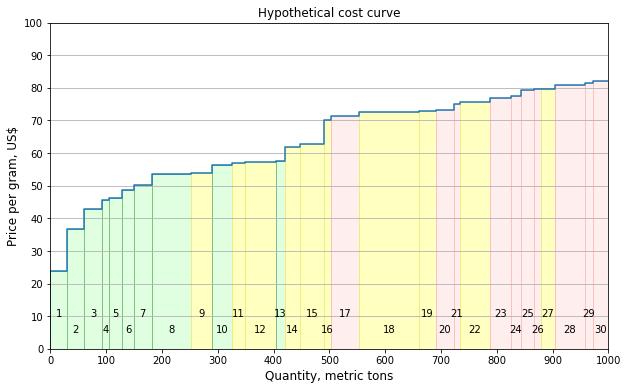

Semiconductor manufacturing occurs in a fabrication plant or “fab”. The raw, unpackaged product is called a die (plural = “dice” or “dies” or “die”), and the master original is called a photomask set or mask set. Engineers cram a bunch of tiny shapes onto the photomasks in the mask set; each of the photomasks defines a separate step in the photolithography process and is used to form the various features of individual circuit elements — usually transistors, sometimes resistors or capacitors — or the conducting paths that interconnect them, or the flat squarish regions called “bonding pads” which are used to connect to the pins of a packaged chip. Ultrapure, polished circular semiconductor wafers are used; most often these are made of silicon (Si), but sometimes they consist of other semiconductor crystals such as gallium arsenide (GaAs), gallium nitride (GaN), silicon carbide (SiC) or a hodgepodge of those elements somewhere towards the right side of the periodic table: AlGaAsPSnGeInSb. These wafers are sawn as thin slivers from a monocrystalline boule, basically a big shiny circular semiconductor salami, which is typically formed by pulling a seed crystal upwards, while rotating, from molten material, using the Czochralski process, which is very hard for us to pronounce correctly.

The wafers have a bunch of die arranged in an array covering most of the wafer’s surface; these are separated into the individual die, and go through a bunch of testing and packaging steps before they end up inside a package with conductive pins or balls, through which they can connect to a printed circuit board. The packaged semiconductor is an integrated circuit (IC) or “chip”. The percentage of die on a wafer that work correctly is called the device yield. Die size and yield are vital in the semiconductor industry: they both relate directly to the cost of manufacturing. If chip designers or process engineers can reduce the die area by half, then about twice as many die can be fit on a wafer for the same cost. If the yield can be raised from 50% to 100% then twice as many die can be produced for end use, for the same cost. Yield depends on numerous processing factors, and gets worse for large die ICs: each specific manufacturing process has a characteristic defect density (defects per unit area), so a larger die size raises the chance that a defect will be present on any given die and cause it to fail.
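To put rough numbers on the die-size/yield relationship, here is a minimal Python sketch using the Poisson yield model, Y = exp(-D·A), which is one common textbook approximation that assumes defects land independently and at random. Real fabs use more elaborate models because defects tend to cluster, and the defect density `D = 2.0` defects/cm² below is a made-up illustrative value, not a figure for any real process. The function names `poisson_yield` and `good_die` are hypothetical, and edge losses are ignored.

```python
import math

def poisson_yield(defect_density: float, die_area: float) -> float:
    """Probability that a die has zero defects if defects fall independently at random.

    defect_density is in defects per cm^2 and die_area in cm^2: Y = exp(-D * A).
    """
    return math.exp(-defect_density * die_area)

def good_die(wafer_area: float, die_area: float, defect_density: float) -> float:
    """Expected good die per wafer, ignoring partial die lost at the wafer edge."""
    gross = wafer_area / die_area
    return gross * poisson_yield(defect_density, die_area)

WAFER_AREA = math.pi * (7.62 / 2) ** 2  # a 3-inch wafer, in cm^2
D = 2.0                                  # hypothetical defect density, defects per cm^2

for area in (0.4, 0.2, 0.1):
    print(f"die area {area:.1f} cm^2: yield {poisson_yield(D, area):.1%}, "
          f"~{good_die(WAFER_AREA, area, D):.0f} good die per wafer")
```

In this model, halving the die area doubles the gross die count and also raises the yield, so the number of good die per wafer more than doubles.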

Think of defects as bullets that kill on contact. The figure below shows three simulated circular wafers with 40 defects in the same places, but with different die sizes. There are fewer of the larger die, and because each die presents a larger cross-sectional area which is prone to defects, the yield ends up being lower.
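The “bullets” picture is easy to reproduce. Below is a rough Monte Carlo sketch in Python, assuming square die on a grid centered on the wafer, a 38 mm wafer radius (about a 3-inch wafer), and 40 defects scattered uniformly at random, with the same defect positions reused for every die size. The grid layout (no edge exclusion, no scribe lanes), the die sizes, and the random seed are simplifications chosen for illustration, not how any real stepper lays out die.

```python
import math
import random

def die_grid(radius: float, size: float):
    """Lower-left corners of square die on a grid centered at the origin,
    keeping only die that lie entirely inside the circular wafer."""
    n = int(radius // size) + 1
    corners = []
    for i in range(-n, n):
        for j in range(-n, n):
            x0, y0 = i * size, j * size
            # A square lies inside a circle if all four of its corners do.
            if all(math.hypot(x, y) <= radius
                   for x in (x0, x0 + size) for y in (y0, y0 + size)):
                corners.append((x0, y0))
    return corners

def random_defects(radius: float, count: int, rng: random.Random):
    """Uniformly distributed defect locations inside the wafer (rejection sampling)."""
    points = []
    while len(points) < count:
        x, y = rng.uniform(-radius, radius), rng.uniform(-radius, radius)
        if math.hypot(x, y) <= radius:
            points.append((x, y))
    return points

def count_good(radius: float, size: float, defects):
    """Return (total die, defect-free die). Half-open bounds mean a defect hits at most one die."""
    dies = die_grid(radius, size)
    good = sum(
        1 for x0, y0 in dies
        if not any(x0 <= x < x0 + size and y0 <= y < y0 + size for x, y in defects)
    )
    return len(dies), good

RADIUS = 38.0                                   # mm, roughly a 3-inch wafer
defects = random_defects(RADIUS, 40, random.Random(1975))

for size in (4.0, 6.0, 10.0):                   # die edge length, mm
    total, good = count_good(RADIUS, size, defects)
    print(f"{size:4.1f} mm die: {good:3d} of {total:3d} good ({good / total:.0%} yield)")
```

Each defect can kill at most one die, so small die lose only a few percent of a large population, while big die lose a much larger fraction of a small one.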

The steps of the fabrication process are performed under various harsh environmental conditions (1000 °C, high pressure or vacuum, and sometimes toxic gases such as silane or arsine, which can react violently if exposed to the oxygen in air) and generally fall into one of the following categories:

- depositing atoms of some element onto the wafer

- coating the wafer with photoresist

- exposing the photoresist to light in a particular pattern (here’s where the photomasks come in)

- etching away material

- annealing — which is a heating/cooling process to allow atoms in the wafer to “relax” and lower crystal stress

- cleaning the wafer

And through the miracle of modern chemistry, we get a bunch of transistors and other things all connected together.

The term “process” in semiconductor manufacturing usually refers to the specific set of steps that are precisely controlled to form semiconductor devices with specific electrical characteristics and geometric tolerances. ICs are designed around a specific process with desired characteristics; the same process can be used to create many different devices. It is not a simple matter to migrate an IC design from one process to another, and this is an important contributor to today’s supply chain woes.

Let’s look at that photomicrograph again:

Photomicrograph © Antoine Bercovici (@Siliconinsid), reproduced with permission

The original 6502 manufactured in 1975 contained 3510 transistors and 1018 depletion-load pullups, in a die that was 0.168 inches × 0.183 inches (≈ 4.27mm × 4.65mm), produced on a 3” silicon wafer.[4] The process used to create the 6502 was the N-channel Silicon Gate Depletion 5 Volt Process, aka the “019” process. Developed at MOS Technology by Terry Holdt, it required seven photomasks, and consisted of approximately 50 steps to produce these layers:[5][6]

- Diffusion

- Depletion implant

- Buried contact (joining N+ to poly)

- Polysilicon

- Pre-ohmic contacts

- Metal (aluminum)

- Passivation (silicon dioxide coating)

You can see these layers more closely in higher-resolution photomicrographs — also called “die shots” — of the 6502. Antoine Bercovici (@Siliconinsid) and John McMaster collaborated on a project to post 6502 die shots stitched together on McMaster’s website, where you can pan and zoom around. (If you look carefully, you can find the MOS logo and the initials of mask designers Harry Bawcom and Michael Janes.) I think the most interesting area is near the part number etched into the die:

Photomicrographs © Antoine Bercovici (@Siliconinsid), stitched and hosted by John McMaster.

Annotations are mine. There is a small photo-stitching discontinuity between the top 1/3 and bottom 2/3 of this image.

The large squarish features are the bonding pads, and are connected to the pins of the 6502’s lead frame with bond wires that are attached at each end by ultrasonic welding, sometimes assisted by applying heat to the welding joint. (I got a chance to use a manual wire bonding machine in the summer of 1994. It was not easy to use, and frequently required several attempts to complete a proper connection, at least when I was the operator. I don’t remember much, aside from the frustration.)

The little cross and rectangles are registration marks, to align the masks and check line widths. The larger squares above them are test structures, which are not connected to any external pins, but can be checked for proper functioning during wafer probing.

Photomicrograph © Antoine Bercovici (@Siliconinsid), reproduced with permission.

This image is a composite of two photographs taken at the same 200× magnification: the 6502 die on the left, and a scale on the right with 10 microns per division (100 microns between major tickmarks).

In these images, the different layers have distinct visual characteristics, except for the depletion implant, which is not visually distinct:

- the silicon substrate is an untextured gray

- the aluminum metal has a granular quality

  - it has a pinkish tinge when it has been covered by the passivation layer (most of the die)

  - when uncovered, as in the bonding pads and test pads, it is a more gray color

- the small green dots represent contacts between metal and silicon

- diffusion regions have a glassy look with discoloration around the edge

- polysilicon shows up as light brown, except when it crosses through a diffusion region, where it is greenish and forms a MOSFET gate — Tada! instant transistor! — controlling whether current can flow between the adjacent diffusion regions. (Ken Shirriff has some more detailed explanations with images for some features of the 6502.)

How many chips are on a wafer? It’s hard to find that information for the 6502, but Wikipedia does have a description of the Motorola 6800:

In the 1970s, semiconductors were fabricated on 3 inch (75 mm) diameter silicon wafers. Each wafer could produce 100 to 200 integrated circuit chips or dies. The technical literature would state the length and width of each chip in “mils” (0.001 inch). The current industry practice is to state the chip area. Processing wafers required multiple steps and flaws would appear at various locations on the wafer during each step. The larger the chip the more likely it would encounter a defect. The percentage of working chips, or yield, declined steeply for chips larger than 160 mils (4 mm) on a side.

The target size for the 6800 was 180 mils (4.6 mm) on each side but the final size was 212 mils (5.4 mm) with an area of 29.0 mm². At 180 mils, a 3-inch (76 mm) wafer will hold about 190 chips, 212 mils reduces that to 140 chips. At this size the yield may be 20% or 28 chips per wafer. The Motorola 1975 annual report highlights the new MC6800 microprocessor but has several paragraphs on the “MOS yield problems.” The yield problem was solved with a design revision started in 1975 to use depletion mode in the M6800 family devices. The 6800 die size was reduced to 160 mils (4 mm) per side with an area of 16.5 mm². This also allowed faster clock speeds, the MC68A00 would operate at 1.5 MHz and the MC68B00 at 2.0 MHz. The new parts were available in July 1976.
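The Wikipedia numbers can be roughly reproduced with a widely quoted first-order estimate of gross die per wafer: wafer area divided by die area, minus an edge-loss term π·d/√(2·A) for the partial die around the rim. The Python sketch below applies it to the 6800 sizes quoted above and to the 6502’s 0.168 × 0.183 inch die on a 76.2 mm (3-inch) wafer. Treat it as an approximation: real counts depend on grid placement, scribe lanes, and edge exclusion, none of which this formula models.

```python
import math

MM_PER_MIL = 0.0254   # 1 mil = 0.001 inch = 0.0254 mm
WAFER_MM = 76.2       # 3-inch wafer diameter

def gross_die_per_wafer(wafer_diameter: float, die_w: float, die_h: float) -> float:
    """First-order estimate: wafer area / die area, minus an edge-loss term (all in mm)."""
    area = die_w * die_h
    return (math.pi * (wafer_diameter / 2) ** 2 / area
            - math.pi * wafer_diameter / math.sqrt(2 * area))

chips = {
    "6800 target (180 mil)":   (180 * MM_PER_MIL, 180 * MM_PER_MIL),
    "6800 as built (212 mil)": (212 * MM_PER_MIL, 212 * MM_PER_MIL),
    "6800 revised (160 mil)":  (160 * MM_PER_MIL, 160 * MM_PER_MIL),
    "6502 (168 x 183 mil)":    (168 * MM_PER_MIL, 183 * MM_PER_MIL),
}

for name, (w, h) in chips.items():
    print(f"{name:24s} {w * h:5.1f} mm^2  ~{gross_die_per_wafer(WAFER_MM, w, h):.0f} gross die")
```

This estimate gives roughly 180 die at 180 mils and 125 at 212 mils, somewhat below Wikipedia’s 190 and 140, but it shows the same trend: the as-built 6800 gave up about 30% of its candidate die compared with the target size, while the 6502 came in slightly smaller than the 6800’s original target.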

The MOS Technology team seized the opportunity and beat Motorola to production with a depletion-load NMOS process (“regular” enhancement-mode N-channel MOSFETs acted as pull-down switches; depletion-mode N-channel MOSFETs were used as a load, with their gate and source tied together to act as a current source) in the 6502, which allowed the design team to achieve higher performance in a smaller die size.

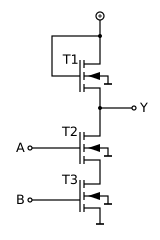

Left: NAND gate with saturated enhancement-load NMOS. Right: NAND gate with depletion-load NMOS. In both cases, T1 acts as a current source, so that the output Y is low only if both A and B are high, but the depletion-mode version of T1 maintains strong current-source behavior at a higher output voltage than the enhancement-mode version, achieving faster rise times for the same static power dissipation. (Images courtesy of Wikipedia)
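To see why the depletion load helps, here is a rough Python sketch using the long-channel square-law MOSFET model. It assumes a 5 V supply, an enhancement threshold of +1 V, and a depletion threshold of -3 V (illustrative values, not documented 6502 process parameters), and it ignores the body effect, which in real enhancement-load circuits makes the high output level even worse. Each load is scaled to draw the same current when the output is pulled low, so the comparison is at equal static power; the printout shows how much pull-up current each load can still deliver as the output rises.

```python
VDD = 5.0      # supply voltage, volts
VT_ENH = 1.0   # assumed enhancement-mode threshold voltage
VT_DEP = -3.0  # assumed depletion-mode threshold (negative: conducts at Vgs = 0)

def nmos_current(vgs: float, vds: float, vt: float, k: float = 1.0) -> float:
    """Long-channel square-law NMOS drain current; no body effect or channel-length modulation."""
    vov = vgs - vt                      # overdrive voltage
    if vov <= 0 or vds <= 0:
        return 0.0
    if vds < vov:
        return k * (vov * vds - vds ** 2 / 2)  # linear (triode) region
    return k / 2 * vov ** 2                     # saturation region

def enhancement_load(vout: float) -> float:
    # Gate and drain tied to VDD, source at the output node: Vgs = Vds = VDD - Vout.
    return nmos_current(VDD - vout, VDD - vout, VT_ENH)

def depletion_load(vout: float) -> float:
    # Drain at VDD, gate tied to the source at the output node: Vgs = 0.
    return nmos_current(0.0, VDD - vout, VT_DEP)

# Normalize so both loads draw the same current with the output low (equal static power).
enh_scale = 1 / enhancement_load(0.0)
dep_scale = 1 / depletion_load(0.0)

for vout in (0.0, 1.0, 2.0, 3.0, 4.0):
    e = enh_scale * enhancement_load(vout)
    d = dep_scale * depletion_load(vout)
    print(f"Vout = {vout:.1f} V   enhancement load {e:.3f}   depletion load {d:.3f}")
```

Under these assumptions the enhancement load’s current falls off quadratically and reaches zero at VDD - VT = 4 V, so the output never gets all the way to 5 V, while the depletion load keeps supplying its full current until the output reaches 2 V and still delivers more than half of it at 4 V.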

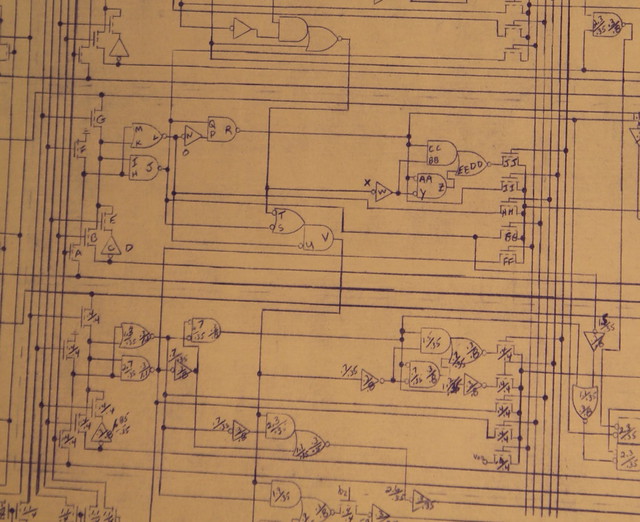

For the most part, design of the 6502 was paper-and-pencil, with some computer-assisted aspects of layout. Peddle was project leader, and focused on the business aspects; he also worked on the instruction set architecture — basically the abstract programmer’s model of how the chip worked, including the various opcodes — with Orgill and Mathys.[7]

To reduce this to a working circuit design, the 6502 team had to come up with a digital design of instruction decoders, arithmetic/logic unit (ALU), registers and data paths (high-level register-centric design) that could be implemented using individual gates made out of the NMOS transistors and depletion loads (low-level circuit design). Peddle, Orgill, Mathys, and Mensch worked out the register structure and other sections of the high-level design,[1 page 28][8] with Mathys translating a sequence of data transfers for each instruction into state diagrams and logic equations.[8] Mensch and Orgill completed the translation of the register-centric design from logic equations into a circuit schematic (technically known as the “650X-C Microprocessor Logic Diagram”[9]) of the NMOS transistors and depletion loads, annotated with dimensions, while Wil Mathys worked on verifying the logic.[10]

Mensch describes Orgill and himself as “semiconductor engineers”, responsible for reducing logic equations to transistor-level implementation in an IC to ensure that it meets speed, size, interface compatibility, and power specifications.[11] Orgill’s specialization was on the high-level architecture, contributing to the ISA, with “a focus on logic design and minimization”,[11] whereas Mensch had a predilection for low-level details. Mensch determined the design rules, ran circuit simulations on portions of the chip — limited to around 100 components at a time with the computation facilities available to MOS Technology in 1975 — and designed in the two-phase clock generator that would become the distinguishing factor between the 6501 and the 6502.[11][12 page 19] (The 6501 and 6502 shared all masks except for the metal layer, which had two slightly different versions: the 6501 left the two-phase clock generator disconnected so that it was pin-compatible with the Motorola 6800, whereas the 6502 connected the clock generator circuitry, breaking pin-compatibility. In 1976, MOS Technology agreed to cease production of the 6501 as a condition of a legal settlement with Motorola.[13])

Orgill and Mensch drew the schematic on mylar, using pencils with plastic lead[14] which could be erased. I found a book that described drafting on mylar this way:[15]

Drawing on mylar for the first time can be a scary experience — both for the novice designer and the company. The surface of mylar drafting film holds drawing lead much more loosely than the fibers in paper. If you were to draw on mylar with a regular graphite “lead” pencil, the disastrous results would be like drawing on a sheet of frosted glass with a charcoal briquette. You could form the lines, but they wouldn’t be very durable against smudges.

To compensate for this lack of adhesion, special plastic lead was developed specifically for use on mylar drafting film. Instead of being made from graphite, this “lead” is made of a soft waxy plastic compound. It comes in varying degrees of hardness just like regular drafting pencils. The softest designation is E0, and they progress in hardness with E1, E2, E3, etc.

Here is part of the schematic, showing a portion of the ALU; the dashed lines each surround one bit of the ALU.[16]

Photo of a portion of the Hanson copy of the 650X-C Microprocessor Logic Diagram, taken by Jason Scott.[17]

The annotations here include two types. The letters A-Z and AA-JJ, according to Mensch, denoted individual transistors for the purposes of checking correctness in the layout.[16] The numbers indicate transistor dimensions, in mils (thousandths of an inch), and are listed in two forms:[14]

- A single number denotes NMOS gate widths, with a standard gate length of 0.35 mil, the minimum used in this design

- A pair of numbers W/L with a dividing line denotes gate width and length — current in the transistor is proportional to W/L, which determines how small and how fast each transistor is.

The transistor at the output of a gate is a depletion-mode pull-up, with the others as enhancement-mode transistor inputs[14] — so, for example, the NOR gate with transistors AA and Y as inputs had gate widths of 0.7 mil and length of 0.35 mil, and a depletion-mode pull-up of 0.3 mil width and 0.8 mil length. (In theory, someone could double-check this against Antoine Bercovici’s die photos of the 6502 rev A, by locating individual transistors and trying to find the corresponding transistors on the logic diagram… I have not, and leave this as an exercise for the industrious reader.)
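The W/L annotations also make it possible to estimate how “ratioed” depletion-load logic behaves. When an input turns on, the pull-down and the always-on pull-up fight each other, and the low output voltage V_OL depends on the ratio of their strengths. The Python sketch below applies the standard textbook square-law analysis (pull-down in its linear region, depletion load in saturation, same process transconductance for both) to the NOR gate dimensions quoted above. The 5 V supply and the +1 V / -3 V thresholds are assumptions, and this is not a reconstruction of how Mensch actually checked the design.

```python
import math

# Transistor dimensions from the NOR gate discussed above, in mils (W, L).
PULL_DOWN_W, PULL_DOWN_L = 0.7, 0.35   # enhancement-mode input transistor
PULL_UP_W, PULL_UP_L = 0.3, 0.8        # depletion-mode load

# Assumed, illustrative process parameters (not documented 6502 values).
VDD = 5.0
VT_ENH = 1.0
VT_DEP = -3.0

def output_low_voltage(wl_down: float, wl_up: float) -> float:
    """V_OL of a depletion-load gate with one pull-down on and its input at VDD.

    Equates the square-law currents:
      wl_down * ((VDD - VT_ENH) * V - V**2 / 2) = wl_up * VT_DEP**2 / 2
    and returns the smaller root of the resulting quadratic.
    """
    a = VDD - VT_ENH
    disc = a * a - (wl_up / wl_down) * VT_DEP ** 2
    if disc < 0:
        raise ValueError("pull-down too weak: no valid low output level")
    v_ol = a - math.sqrt(disc)
    # Check the operating-region assumption for the load.
    if VDD - v_ol < -VT_DEP:
        raise ValueError("load not saturated; square-law assumption violated")
    return v_ol

wl_down = PULL_DOWN_W / PULL_DOWN_L
wl_up = PULL_UP_W / PULL_UP_L
print(f"pull-down W/L = {wl_down:.2f}, load W/L = {wl_up:.3f}, ratio = {wl_down / wl_up:.1f}")
print(f"estimated V_OL = {output_low_voltage(wl_down, wl_up):.2f} V")
```

With these assumptions the pull-down is about 5.3 times stronger than the load and V_OL comes out around 0.2 V, comfortably below the assumed 1 V threshold of the next gate. A weaker pull-down or a stronger load would push V_OL up toward the point where the next gate can no longer tell a 0 from a 1.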

The minimum gate length of 0.35 mil implies a technology node of 0.35 mil ≈ 8.9 micron for the 6502.

There are a few other interesting things visible from the schematic — the use of dynamic logic, for example. Anytime you see clock signals (ϕ1 and ϕ2 are the two-phase clock signals on the 6502) doing weird stuff, where some logic gate doesn’t have any driving input part of the time, you know you’ve got dynamic logic going on. (Wikipedia says “Dynamic logic circuits are usually faster than static counterparts, and require less surface area, but are more difficult to design.”) What caught my eye was the “T” and “B” on these AND gate inputs shown below:

Photo of a portion of the Hanson copy of the 650X-C Microprocessor Logic Diagram, taken by Jason Scott.[17]

I asked Mensch about this; he said they stood for “top” and “bottom”, specifically referring to the implementation of an AND or NAND gate in depletion-mode NMOS.[16] Here’s a transistor-level implementation of that pair of AND gates followed by a NOR gate:

Transistors Q1 (top) and Q2 (bottom) would correspond to one T/B pair of AND-gate inputs, and Q3 (top) and Q4 (bottom) the other. This matters because switching speed is different for the top and bottom MOSFETs — the top ones have drain-to-gate capacitance slowing down the switching (the Miller effect), whereas the bottom ones see a low-impedance load from the top transistors, forming a cascode configuration. As to why that is critical here, I’m out of my element — Mensch says the bottom transistor should be the first transistor to change state, and the last signal to change should be the top transistor[18] — but the point here is that digital logic design is not just a nice little abstraction layer with ones and zeros based on simple, identical, combinational logic and flip-flops. A lot of work went into choosing transistor sizes to get the 6502 to work fast under die size constraints.

The schematic also served as a rough layout known as a floorplan showing high-level placement, with the various gates arranged on the schematic roughly where Mensch thought they should go on the chip.[19] Bawcom, Holt, and Janes were mask designers for the 6502 chipset, taking the circuit design and placement and implementing them as individual transistors or resistors, made out of rectangular features sketched on various layers of Stabilene mylar film.

The mask designers did not draw these features directly by hand — when I first started reading historical accounts of the 6502 for this article, I had a mental image of them sketching transistors on Stabilene one by one, fitting together like a puzzle until the last pieces were drawn in… and dammit, there’s only enough room for seven flip-flops, not eight, so they’d have to start over and try again. But that’s not how it worked. Instead, the design was based on “cells”, small reusable pieces of the design that could be planned separately and then fit into place in the layout, like Escher tessellations all coming together, or some kind of sadistic furniture floor plan where the room is full of tables and chairs and sofas with no space between them. Harry Bawcom, who previously worked on bipolar TTL layout at Motorola and was brought in to finish the 6800 microprocessor layout,[20] described cells this way:[21]

Cell design started with little stickies of transistors underneath clear mylar and you did the first pass with a grease pencil and a lot of iteration. That was a Bipolar technique that the MOS folks didn’t use. Probably why I was five times faster. By the time I picked up a pencil I knew where I was going.

According to Mensch, these physical representations of cells used in drafting were also called “paper dolls”, a term that shows up every now and then in accounts of that era. Joel Karp, the first MOS chip designer at Intel, also used this term describing the rather painstaking layout process for the Intel 1102 and 1103 1024-bit DRAM ICs.[22] Another account, from New York’s Museum of Modern Art, described a Texas Instruments logic chip layout from around 1976:[23]

At the time this plot was hand drafted, it was still possible to verify the design of individual components visually. To repeat a circuit element multiple times, an engineer would trace the initial drawing of the component, photocopy it onto mylar, then cut and glue it onto the diagram. The collage technique is referred to as “paper-doll layout.” Intended for use in a military computer, this particular chip was designed to sense low-level memory signals, amplify the signals to a specific size, and then store them in a memory cell for later recall.

But the early microprocessor designs at Motorola and MOS Technology were just starting to emerge from the manual-only world. Here the computer-assisted aspects came into play: for the 6502, someone at MOS captured each cell on the Stabilene film using a Calma GDS workstation and digitizer.[24][12 page 12] (Bawcom refers to this person as the “Calma operator” but says he “did not witness this process at MOS Technology.”[21]) Where possible, the Calma workstation was used to replicate cells that could be repeated in the design.[21]

Digitizer table for capturing IC design at MOS Technology, titled "DATA INPUT FOR AUTOMATIC MASK PREPARATION" on a MOS Technology brochure from 1970, courtesy of Diana Hughes and Team6502.org.

The digitizer was a drafting table with a precision position sensor that could record x-y coordinates of any position on the table. The workstation was a Data General Nova minicomputer[25] with a 5-megabyte hard drive and 16K of RAM. The minicomputers at that time were built mostly out of standard logic chips (like the 7400 series) in DIP packages — each typically containing an array of 2-8 components like gates, registers, multiplexers, etc. — soldered onto circuit boards to make a processor and other associated sections. A cabinet-sized computer, rather than a room-sized mainframe. (If you haven’t read Tracy Kidder’s The Soul of a New Machine, make a note to do so: it chronicles the design of the Data General Eclipse MV/8000, a descendant of the Nova.) The Calma GDS stored the layout design as polygons and could be used to draw the layout on a plotter, or to cut a photomask drawing out of a red film called Rubylith, also using the plotter, but with a precision blade used in place of a plotter pen.[12 page 12][26] Then the unwanted sections of Rubylith would be removed very carefully by hand during what MOS Technology engineers called a “peeling party”, according to Albert Charpentier.[26]
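Storing a layout as reusable cells placed at offsets, and then expanding everything into plain polygons for the plotter, is the core idea behind replicating cells instead of redrawing them. Here is a minimal Python sketch of that idea with hypothetical `Rect` and `Cell` classes. It is not the Calma GDS data format or software, and the layer names and dimensions (in mils) are made up for illustration.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Rect:
    """An axis-aligned rectangle on one mask layer (coordinates in mils)."""
    layer: str
    x0: float
    y0: float
    x1: float
    y1: float

    def moved(self, dx: float, dy: float) -> "Rect":
        return Rect(self.layer, self.x0 + dx, self.y0 + dy, self.x1 + dx, self.y1 + dy)

@dataclass
class Cell:
    """A reusable piece of layout: its own shapes plus placed copies of other cells."""
    name: str
    shapes: list = field(default_factory=list)     # Rects drawn directly in this cell
    instances: list = field(default_factory=list)  # (child Cell, dx, dy) placements

    def place(self, child: "Cell", dx: float, dy: float) -> None:
        self.instances.append((child, dx, dy))

    def flatten(self, dx: float = 0.0, dy: float = 0.0) -> list:
        """Expand the hierarchy into a flat list of rectangles in absolute coordinates."""
        flat = [r.moved(dx, dy) for r in self.shapes]
        for child, cx, cy in self.instances:
            flat.extend(child.flatten(dx + cx, dy + cy))
        return flat

# Hypothetical one-bit cell: a diffusion strip crossed by a polysilicon gate, plus a metal line.
bit = Cell("bit", shapes=[
    Rect("diffusion", 0, 0, 2, 6),
    Rect("poly", -1, 2, 3, 2.35),
    Rect("metal", 0, 7, 10, 8),
])

# Place eight copies side by side instead of drawing eight bits by hand.
register = Cell("register8")
for i in range(8):
    register.place(bit, dx=i * 12, dy=0)

flat = register.flatten()
print(f"{len(flat)} rectangles generated from {len(bit.shapes)} drawn once")
```

Because the register only refers to the `bit` cell, a change to that one cell shows up in all eight copies the next time the layout is flattened, which is the appeal of replicating cells rather than redrawing them.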

After a lot of very careful checking and revision, the set of Rubylith photomask drawings — shown in this picture from the August 25, 1975 edition of EE Times — were photographically reduced to a set of master glass reticles, one per mask, at 10 times actual size.[24] Each 10× reticle was used to reduce the design further, producing a 1:1 mask using a machine called a reduction stepper, which precisely locates multiple copies covering most of the 3-inch[24][27] wafer. In early production, contact or proximity masks were used,[12][28][29] but once MOS had been able to upgrade to four-inch wafers,[29] a Perkin-Elmer Micralign projection mask aligner[27][1] was used to scan the 1:1 mask bit by bit, using a clever symmetrical optical system, for lithography steps.[30]

The Micralign projection aligner was one of several reasons the 6502 team succeeded: it improved yields. (Remember: die size and yield are vital!) Motorola’s NMOS process yields were poor,[31][32] putting it at a cost disadvantage. Mensch says that Ed Armstrong, Motorola’s head of process engineering at the time, grew a beard and held off shaving it until they could get 10 good die on a wafer.[12][19][10] The MOS team got much higher yields than Motorola, in part by using a projection mask system: previous-generation lithography systems used contact masks, which touched the wafers and had limited durability. Motorola had used contact masks for the 6800.[1 page 22] From Perkin-Elmer’s Micralign brochure:[33]

Historically, the manufacture of integrated circuits involved placing the photomask directly in contact with the wafer during the exposure process. Repeated just a few times, this contact soon degraded the mask surface and the photoresist layer. Each defect that resulted was then propagated through the replication cycle. Consequently, masks were considered expendable, to be used between five and fifteen times and then discarded.

These problems led to several attempts at prolonging mask life. One was to make the photomask from harder materials that were more resistant to abrasion. Another was to reduce abrasion by reducing or even eliminating the contact force. These efforts did improve mask life to a limited extent, but neither was as effective as optically projecting the photomask image onto the wafer.

A second reason for the 6502’s higher yield was something MOS Technology referred to as “spot-knocking”[12 page 18], essentially a retouching of point defects in the masks.

The third reason for higher yields was Mensch’s design rules (constraints on transistor size and feature spacing), which were conservative and much more tolerant of process variations.[19] He had learned this approach on his own through his experiences at Motorola, along with some lessons about what was and wasn’t possible to achieve at the company.
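To put rough numbers on why yield mattered so much, here is a minimal sketch of cost per good die. It assumes the Poisson yield model, Y = exp(−D·A), a standard textbook simplification that does not come from the sources cited here, plus a common first-order estimate of gross dies per wafer. The wafer cost, die area, and defect densities are hypothetical round numbers, not MOS Technology or Motorola data. Fewer mask defects (projection aligners, spot-knocking) and design rules that tolerate process variation both show up here, loosely, as a lower effective defect density D.

```python
import math


def gross_dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """First-order estimate: wafer area / die area, minus partial dies lost at the edge."""
    wafer_area_mm2 = math.pi * (wafer_diameter_mm / 2) ** 2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return int(wafer_area_mm2 / die_area_mm2 - edge_loss)


def poisson_yield(defects_per_cm2: float, die_area_mm2: float) -> float:
    """Poisson yield model Y = exp(-D * A), with the die area converted to cm^2."""
    die_area_cm2 = die_area_mm2 / 100.0
    return math.exp(-defects_per_cm2 * die_area_cm2)


def cost_per_good_die(wafer_cost: float, wafer_diameter_mm: float,
                      die_area_mm2: float, defects_per_cm2: float) -> float:
    """Spread the cost of a processed wafer over only the dies expected to work."""
    good_dies = (gross_dies_per_wafer(wafer_diameter_mm, die_area_mm2)
                 * poisson_yield(defects_per_cm2, die_area_mm2))
    if good_dies <= 0:
        raise ValueError("no good dies expected; cost per good die is unbounded")
    return wafer_cost / good_dies


if __name__ == "__main__":
    # Hypothetical inputs: a 3-inch (76.2 mm) wafer, a 20 mm^2 die,
    # and an arbitrary wafer cost of 100 currency units.
    for defects in (1.0, 5.0, 20.0):
        cost = cost_per_good_die(100.0, 76.2, 20.0, defects)
        print(f"D = {defects:4.1f} defects/cm^2 -> cost per good die = {cost:7.2f}")
```

In this toy model, going from 5 to 20 defects/cm² drops the yield from about 37% to under 2%, so each good die costs roughly 20 times as much; the same wafer and the same design can end up competitive or hopeless depending on the process.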

Mensch’s first year at Motorola in 1971 was a rotation through four departments: Applications, Circuit Design, Process Design, and Marketing.[34] In Marketing, his supervisor Dick Galloway asked him to put together a quote for IBM for memory chips over a seven-year period, with pricing decreasing over time: a fairly complicated document, with lots of numbers that had to be typed accurately. Rather than have a secretary type it up and then go through the trouble of finding and correcting errors, he wrote a FORTRAN program on the Motorola mainframe computer to take in parameters, plug the numbers into some formulas, and print out the quote on a terminal with a thermal printer; he then copied the result onto better paper. The Marketing staff asked him how he did it, and when he told them, Galloway said “Bill, we want you to work in the Design Group.” “Why is that?” “None of their chips work. We want you to work there. I think if you work there, the chips will work again.”[12][19]

As the new, inexperienced engineer in the IC design group, Bill Mensch’s introduction involved a lot of what the other engineers would call grunt work. These efforts included work on Motorola’s standard cell library in various MOS processes, and on the process control monitor for memory and microcontroller designs.[24][12] The process control monitor (PCM) is a special set of test structures used to measure the parameters of basic circuit elements such as transistors, resistors, capacitors, and inverters, not only to make sure the manufacturing process is working as expected and to check for statistical variation, but also to characterize these elements for simulation purposes. Nowadays it is typical to put those test structures in the scribe lines between ICs, since they can be so small, but in earlier IC designs the PCMs were placed at a few locations on the wafer in place of the product, usually forming a plus-sign pattern of five PCMs. Early 6502 wafers from MOS Technology are (in 2022, at least) apparently nowhere to be found, but some later MOS wafers occasionally show up on eBay. I did find a creator of “digital art”, Steve Emery at ChipScapes, who had a 4-inch Rockwell R6502 wafer, apparently from the mid-1980s, on which you can see the PCMs. (Synertek and Rockwell were both licensed by MOS Technology as second sources for the 6502.) He was kind enough to take some photomicrographs of them for me:

Ray Hirt designed the PCMs for the MOS 6502;[24] the Rockwell PCMs shown here are almost certainly not the ones Hirt designed in 1974-1975, but the overall concept is the same. The Rockwell R6502 wafer has two different types of PCM: three of one type in the middle rows of the wafer, and two of another type at the top and bottom.

The ones at the top and bottom look like image-resolution tests for the various layers; they have no electrical connections:

The three others have a bunch of circuit pads connected to various test elements:

The PCMs that Mensch and Hirt designed included transistors of various dimensions, digital inverters, and ring oscillators. The inverter could be used to measure the input-output transfer function, and the ring oscillator to measure intrinsic time delays. The transistors typically included a minimum-size transistor (0.4 mil × 0.4 mil ≈ 10 μm × 10 μm in the early 1970s) and others with different widths and lengths, so that the transistor parameters could be characterized as a function of geometry.[2] In a 2014 interview, Mensch described the PCMs from his early days at Motorola this way:[12]

We had to make some changes to model because of things I found. And I found that narrow transistors had a higher voltage threshold than a short one, and these are things that the memory product guys didn’t use. And so they had to change their design because of what I found on the process control monitor. I put very narrow transistors, very wide transistors, very large transistors, and very short transistors, so I knew the characteristics and what the actual sizing might have an effect on.
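The ring oscillator in a PCM works because a loop of an odd number N of inverters can never settle: a transition has to travel around the loop twice per period (once as a rising edge, once as a falling edge), so T = 2·N·t_d and the average per-stage delay is t_d = 1/(2·N·f). A minimal sketch of that arithmetic follows, along with the mil-to-micron conversion behind the minimum transistor size mentioned above. The 11-stage, 5 MHz example is hypothetical, not a measurement from Mensch’s or Hirt’s PCMs.

```python
MICRONS_PER_MIL = 25.4  # 1 mil = 0.001 inch = 25.4 micrometers


def mils_to_microns(mils: float) -> float:
    return mils * MICRONS_PER_MIL


def stage_delay_from_ring(num_stages: int, frequency_hz: float) -> float:
    """Average per-inverter delay t_d = 1 / (2 * N * f) for an N-stage ring oscillator.

    Treats rising and falling delays as equal; t_d is effectively their average.
    """
    if num_stages < 3 or num_stages % 2 == 0:
        raise ValueError("a ring oscillator needs an odd number of inverters, at least 3")
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return 1.0 / (2 * num_stages * frequency_hz)


if __name__ == "__main__":
    side_um = mils_to_microns(0.4)
    print(f"0.4 mil = {side_um:.2f} um")               # 10.16 um
    delay_s = stage_delay_from_ring(11, 5e6)            # hypothetical measurement
    print(f"per-stage delay = {delay_s * 1e9:.2f} ns")  # 9.09 ns
```

The appeal of this structure on a test wafer is that a frequency is easy to measure with probes, whereas a single gate delay of a few nanoseconds is not.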

When I spoke with him in March, he described his experience a bit more candidly. As a young engineer at Motorola trying to learn the best way to design ICs, Mensch wanted to know what numbers to use for a transistor simulation model, so he asked around and found that each of the design engineers used different numbers in their calculations. A typical exchange went like this:[2]

Mensch: Why are you using those numbers?

Engineer: Well, I just think it’s the right number.

Mensch: Yeah, but… but… who’s… who’s giving out the numbers? What temperature are you simulating it at?

Engineer: Well… at room temperature.

Mensch: Well, why room temperature?

Engineer: Well, that’s what we take the data on.

Mensch: Yeah, but you know it’s gonna run at 125°C, right? And minus 55, we need to get them to work that way.

Engineer: Yeah, but… whatever.

Mensch’s threshold-voltage discovery (that the gate threshold voltage on the same process varied with transistor geometry, so a good model would have to take this into account) was not immediately well received; at first, engineers from the memory group didn’t believe him. He ended up sending around an inter-office memo to call a meeting (“MEETING TO PICK BILL’S SIMULATION NUMBERS”) and got everybody to attend through the happy accident of including Jack Haenichen on the cc: list. Haenichen was Motorola’s youngest vice-president: he was first elected in 1969, at the age of 34, as Vice President and Director of Operations, Services and Engineering in Motorola’s Semiconductor Products Division, and in early 1971 he was named Director of Operations for MOS.[35][36][37] Haenichen had taken an interest in Mensch’s progress during his rotation in the Marketing department, and had asked to be kept informed of how things were going. As Mensch described it: “So this interoffice memo, everybody would see, ‘Hey, Jack’s on this list! Oh, we gotta show up.’ I never realized why all these people showed up at my meeting.” He eventually chose simulation parameters representing the worst case of everyone’s numbers.[2]
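Picking the worst case of everyone’s numbers amounts to building a pessimistic “corner” from a set of estimates. Here is a minimal sketch, assuming that “worst” means slowest switching (highest threshold voltage, lowest transconductance parameter); the parameter names and the three sets of values are hypothetical, not the numbers from Mensch’s meeting. Checks for other failure modes, such as leakage, would want a different corner.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeviceParams:
    label: str
    threshold_v: float    # gate threshold voltage; higher means slower switching
    k_prime_ua_v2: float  # hypothetical process transconductance parameter; lower means less drive


def slow_corner(candidates: list[DeviceParams]) -> DeviceParams:
    """Combine the most pessimistic value of each parameter, taken independently."""
    if not candidates:
        raise ValueError("need at least one parameter set")
    return DeviceParams(
        label="worst case (slow)",
        threshold_v=max(c.threshold_v for c in candidates),
        k_prime_ua_v2=min(c.k_prime_ua_v2 for c in candidates),
    )


if __name__ == "__main__":
    # Hypothetical estimates from three engineers.
    estimates = [
        DeviceParams("engineer A", threshold_v=1.2, k_prime_ua_v2=20.0),
        DeviceParams("engineer B", threshold_v=1.5, k_prime_ua_v2=18.0),
        DeviceParams("engineer C", threshold_v=1.0, k_prime_ua_v2=25.0),
    ]
    print(slow_corner(estimates))
```

Taking each parameter’s extreme independently can yield a combination that no single engineer proposed; in this sketch that pessimism is deliberate, since a design that works at this corner should also work with any of the individual estimates.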

Over the next few years, an opportunity began to emerge. Mensch was no longer a green engineer; by 1974, he had designed the 6820 Peripheral Interface Adapter, and he and Rod Orgill had worked together on design teams for two microprocessors at Motorola: the 5065, a custom microprocessor for Olivetti, and the Motorola 6800.[3] Mensch had also designed the PCM for the 6800, and included test structures not only for the 6800’s enhancement-load process but also for a depletion-load process, ready to help prove out the superiority of the concept with just a slight change in the masks and processing steps.[12] Meanwhile, Chuck Peddle had joined Motorola, and in 1974 was traveling the country giving seminars on the 6800 to prospective customers, who were very interested, but not at the price Motorola was offering. Peddle wanted to pursue a lower-cost version of the 6800.[1] Motorola had the advantage of financial resources; the company’s 1972 Annual Report stated proudly that its revenues exceeded a billion dollars for the first time, and “Metal-oxide-semiconductor (MOS) integrated circuit sales for Motorola during ’72 grew at a faster rate than the world industry, whose growth was an estimated 60-70%.”[38] The 1973 Annual Report stated \$1.437 billion in revenue, with the company’s Semiconductor Products Division reporting revenue “up more than 45% over the previous year”, and expressed an optimistic view of the microprocessor market:[39]

The burgeoning microprocessor market is presenting the industry with a radical opportunity to engineer into electronic systems significant benefits not previously possible. The true extent to which microprocessors will be adopted is not yet apparent, even though the current picture indicates a possibly phenomenal market whose growth rate could eclipse that of today’s fastest growing semiconductor categories. Motorola has a major commitment to the microprocessor market, and we intend to secure a significant share. Development in this area has reached an advanced stage.

Motorola had already been in the electronics business for decades — starting with car radios in 1930 and getting into the semiconductor market with mass-production of germanium power transistors in 1955 — with a well-established sales and distribution network. It had the tools and staff to design and manufacture cutting-edge microprocessors.

So why was the low-cost 8-bit microprocessor a project at MOS Technology instead of Motorola?

The Elephant and the Hare

I have struggled to understand: Why not at Motorola? Motorola had all these resources, and an opportunity to follow up on the 6800, but at first glance appears to have squandered the opportunity.

Motorola and MOS Technology were two very different companies. In Motorola’s case, being a large company gave it significant long-term advantages, in the form of product diversity — Motorola was nearly a self-contained “supermarket” for the circuit designer, with discrete, analog, and digital ICs, so it benefited from many market trends in electronics — and inertia. Its size allowed Motorola some freedom to “coast”, when necessary, on its past successes. MOS Technology was small and agile, and had to survive by being competitive in a few specific areas like MOSFET-based IC design and manufacturing technology. A business failure of a few million dollars would have been a minor setback for Motorola, but a mortal wound for MOS.

A 1970 ad campaign describes “Motorola’s Ponderous Pachyderm Syndrome”,[40] something that seems like incredibly poor marketing:

Haenichen described in an interview:[41]

Motorola, at the time, was called the “Ponderous Pachyderm” by the industry people. In other words, we maybe were not the “latest and greatest” but when we started making something, we wiped everybody out, because we just made them by the billions — that was our reputation, slow moving but good.

Yeah, um… okay. I get the idea. Take a little longer and become a dominant player in the industry… sure. But a “ponderous pachyderm” as high-tech corporate metaphor? Not exactly the most inspiring.

And yet, if we fast-forward to the 1980s and 1990s, Motorola did find success with its microprocessor offerings. It reached its zenith a few years after the 6800 and its follow-up, the 6801, with the 68000 series, produced roughly from 1979 to 1994 and used in many systems, notably the Apple Macintosh. Later 6800-series ICs like the 68HC11 also took a prominent position in the microcontroller market.

Even by early 1980, the 6800 and 6809 had achieved market success. While looking for historical pricing information in Byte Magazine’s January 1980 issue, I came across several ads for third-party systems and software tools for the 6800 and 6809. The chip distributor ads in the back of the magazine listed various microprocessors, almost all in the \$10-\$20 range, including the Zilog Z80, the 6502, the 6800, RCA’s CDP1802, and Intel’s 8080. By then, Motorola had managed to lower the cost of the 6800.

But 1974 was a different story. With a major economic recession looming, Motorola’s Semiconductor Products Division became more risk-averse and focused on getting the 6800 out the door successfully. Mensch, who had worked on the 6800’s process control monitor and snuck in depletion-load test structures alongside the normal enhancement-load ones, was pushing to have one wafer ion-implanted to try out the depletion-load process. When he talked to Armstrong (head of process engineering), he was finally told why they wouldn’t let him investigate depletion mode: “We were afraid you wouldn’t complete the designs with enhancement mode.”[19]

Tom Bennett, who led the chip design of the 6800, described relying on depletion loads as “a little risky”:[31]